Sending logs directly to GCS using Cloud Logging fluentd

2020-07-20

A couple of weeks back I was looking at how someone could send non-sensitive logs directly to Google Cloud Storage (GCS) without having to go through the Logging API. The recommended way to get logs into GCS with the Cloud Logging fluentd agent is to... well, just use the agent as is and set up a log router rule.

The problem with using a log router is that each log line has to go through the Logging API first, and that path is subject to several quotas, including size limits.

What if we could still use fluentd but bypass the Logging API, and have the logging plugin emit logs to GCS as standard LogEntry records? That way, you can use fluentd on a VM to send all your critical, time-sensitive logs through the API, while for other log types you “just send them” preformatted to GCS.

As mentioned, if the agent saves the logs in LogEntry format, that is more or less the same format a log router sink writes when it exports log lines to GCS or BigQuery (BQ). This means that if we upload logs in that standard format, they can be processed uniformly with Dataflow or another ETL pipeline and loaded into BigQuery.
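For reference, a LogEntry serialized as JSON is an object with fields such as logName, resource, timestamp, severity, and a payload (textPayload or jsonPayload). The minimal Python sketch below wraps a raw syslog line in such an object. The helper `to_log_entry`, the `syslog` log ID, and the `gce_instance` resource labels are illustrative assumptions. They are not a literal copy of what the patched google-fluentd plugin emits.

```python
import json
from datetime import datetime, timezone


def to_log_entry(line, project_id, instance_id, zone, log_id="syslog", now=None):
    """Wrap one raw log line in a LogEntry-shaped dict (hypothetical helper).

    Only a subset of LogEntry fields is filled in. `now` should be a
    timezone-aware UTC datetime; it defaults to the current time.
    """
    ts = (now or datetime.now(timezone.utc)).isoformat().replace("+00:00", "Z")
    return {
        "logName": f"projects/{project_id}/logs/{log_id}",
        "resource": {
            "type": "gce_instance",
            "labels": {
                "project_id": project_id,
                "instance_id": instance_id,
                "zone": zone,
            },
        },
        "textPayload": line.rstrip("\n"),
        "timestamp": ts,
        "severity": "DEFAULT",
    }


if __name__ == "__main__":
    # Placeholder project, instance, and zone values.
    entry = to_log_entry("hello", "my-project", "1234567890", "us-central1-a")
    print(json.dumps(entry))
```

One such object per line is the kind of uniform record a downstream ETL job can treat the same way as a sink export.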

It turns out… @daichirata already wrote the raw fluentd->GCS exporter (fluent-plugin-gcs)!

However, that plugin exports a log file to GCS as is, whereas what we need is to preprocess each log line locally into a LogEntry and then emit that. That's actually easy enough to do with some small (unsupported!) modifications to the google-fluentd plugin.

Specifically, we need google-fluentd to process each log line, use it to create a LogEntry, and save those LogEntry records in bulk to a file. From there, the fluentd->GCS plugin reads the file and uploads it to GCS. Any log that does not match the google-fluentd filter that writes to the file still goes directly to Cloud Logging via the Logging API.
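Here is a rough Python model of that routing step. In the real setup, fluentd match rules and the patched output plugin make this decision, not Python. The tag prefix `syslog`, the function name `route`, and the assumption that the buffer file holds newline-delimited JSON are all hypothetical choices made for illustration.

```python
import io
import json

# Hypothetical: records whose tag starts with one of these go to the local
# buffer file that fluent-plugin-gcs later uploads.
FILE_TAG_PREFIXES = ("syslog",)


def route(records, buffer_file, file_tag_prefixes=FILE_TAG_PREFIXES):
    """Append matched LogEntry dicts to buffer_file as NDJSON; return the rest.

    The returned (tag, entry) pairs stand in for the records google-fluentd
    would still send through the Logging API.
    """
    to_api = []
    for tag, entry in records:
        if tag.startswith(file_tag_prefixes):
            buffer_file.write(json.dumps(entry, sort_keys=True) + "\n")
        else:
            to_api.append((tag, entry))
    return to_api


if __name__ == "__main__":
    buf = io.StringIO()
    rest = route(
        [("syslog", {"textPayload": "hello"}),
         ("apache-access", {"textPayload": "GET /"})],
        buf,
    )
    print(buf.getvalue(), end="")  # only the syslog record lands in the file
    print(rest)                    # the apache record is left for the API
```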

Again, to emphasize: this procedure uses an unsupported modification to the google-fluentd output plugin.

Setup

Create a GCE VM

Enable the storage.write and logging.write IAM permissions. The GCE VM's service account needs write access to the GCS bucket the logs will be sent to.

Install standard google-fluentd

curl -sSO https://dl.google.com/cloudagents/add-logging-agent-repo.sh

sudo bash add-logging-agent-repo.sh

sudo apt-get update

sudo apt-get install google-fluentd wget

sudo apt-get install -y google-fluentd-catch-all-config-structured

Install fluent-plugin-gcs

sudo /opt/google-fluentd/embedded/bin/gem install google-cloud-storage fluent-plugin-gcs

Apply google-fluentd patch

Apply fluentd config file

Which logs you send to GCS or to Logging API is dictated by the fluentd config file.

In the sample below, all syslog entries are sent to a GCS bucket called mineral-minutia-820-logs every hour.

/etc/google-fluentd/google-fluentd.conf

My default conf file is here for reference.

Restart fluentd

sudo service google-fluentd restart

Write some log lines and wait... get a coffee, since we said the upload happens every hour.

logger hello

logger world

Follow the agent's log file:

tail -f /var/log/google-fluentd/google-fluentd.log

Note that the log lines destined for GCS are only buffered at this point. They are not sent to GCS until the hourly flush, so wait.

Eventually, you'll see a line like this from the GCS plugin, indicating that the file was uploaded:

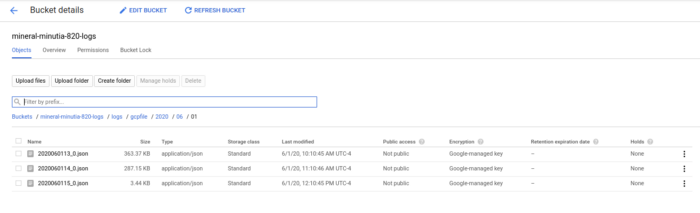

And in the GCS bucket, you should see hourly log files in JSON, where each file contains every syslog entry from that hour.
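The hourly grouping can be modeled in a few lines: bucket LogEntry records by the UTC hour of their timestamp. This is roughly what the plugin's hourly buffer does before each upload. The function name `bucket_by_hour`, the `%Y%m%d%H` bucket key, and the assumption that timestamps are UTC RFC 3339 strings are illustrative. They do not describe the plugin's actual implementation or object naming.

```python
import json
from collections import defaultdict
from datetime import datetime


def bucket_by_hour(ndjson_lines):
    """Group LogEntry JSON lines by the hour of their timestamp.

    Assumes RFC 3339 UTC timestamps such as 2020-07-20T12:34:56.789Z.
    """
    buckets = defaultdict(list)
    for line in ndjson_lines:
        if not line.strip():
            continue  # skip blank lines
        entry = json.loads(line)
        # Only the first 19 chars (YYYY-MM-DDTHH:MM:SS) are needed to find the hour.
        ts = datetime.strptime(entry["timestamp"][:19], "%Y-%m-%dT%H:%M:%S")
        buckets[ts.strftime("%Y%m%d%H")].append(entry)
    return dict(buckets)


if __name__ == "__main__":
    lines = [
        '{"timestamp": "2020-07-20T12:01:00Z", "textPayload": "hello"}',
        '{"timestamp": "2020-07-20T12:59:59.5Z", "textPayload": "world"}',
        '{"timestamp": "2020-07-20T13:00:00Z", "textPayload": "later"}',
    ]
    for hour, entries in sorted(bucket_by_hour(lines).items()):
        print(hour, [e["textPayload"] for e in entries])
```

Running it prints one line per hour bucket: `2020072012 ['hello', 'world']` and then `2020072013 ['later']`.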

BQ Parsing

The final goal here is to do analytics, but there's a problem with importing the logs as is that I didn't figure out:

The log lines above are not in a format that is readily importable into BQ, for a silly reason:

The resource names in the file are not escaped correctly.

What I mean is that a JSON field name such as "compute.googleapis.com/resource_name" (under labels) needs to be escaped to labels.compute_googleapis_com_resource_name before it can be imported into BQ.

You can either modify the fluentd-gcs plugin (preferable) or post-process the log files (ugh!).
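If you go the post-processing route, here is a minimal Python sketch. It assumes the exported files are newline-delimited LogEntry JSON. It applies a simple rule: every character other than a letter, digit, or underscore becomes `_`, and a leading `_` is added if the name would otherwise start with a digit. That rule turns compute.googleapis.com/resource_name into compute_googleapis_com_resource_name. The helpers `bq_safe_key`, `escape_keys`, and `escape_ndjson` are hypothetical and not part of either plugin.

```python
import io
import json
import re

# Anything that is not a letter, digit, or underscore gets replaced.
_INVALID = re.compile(r"[^A-Za-z0-9_]")


def bq_safe_key(key):
    """Turn a JSON field name into a BigQuery-friendly column name."""
    safe = _INVALID.sub("_", key)
    # Column names must start with a letter or underscore.
    if not re.match(r"[A-Za-z_]", safe):
        safe = "_" + safe
    return safe


def escape_keys(value):
    """Recursively rename dict keys; leaf values are left untouched."""
    if isinstance(value, dict):
        return {bq_safe_key(k): escape_keys(v) for k, v in value.items()}
    if isinstance(value, list):
        return [escape_keys(v) for v in value]
    return value


def escape_ndjson(src, dst):
    """Read LogEntry NDJSON from src and write key-escaped NDJSON to dst."""
    for line in src:
        if line.strip():
            dst.write(json.dumps(escape_keys(json.loads(line))) + "\n")


if __name__ == "__main__":
    # Quick self-check on one sample record.
    sample = '{"labels": {"compute.googleapis.com/resource_name": "vm-1"}, "textPayload": "hello"}\n'
    out = io.StringIO()
    escape_ndjson(io.StringIO(sample), out)
    print(out.getvalue(), end="")
```

To process a downloaded file, call `escape_ndjson` with an open input file and an output file. Then load the result with `bq load --source_format=NEWLINE_DELIMITED_JSON`. Keep in mind that this naive rule can map two different keys (for example `a.b` and `a_b`) to the same column.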

google-fluentd from on-prem or unsupported platforms

This article covers how to set this up on GCP VMs, since google-fluentd is not supported on arbitrary platforms. That said, there is technically nothing preventing you from using google-fluentd itself to emit logs from arbitrary platforms, since it's just an API call. If you are interested in using google-fluentd for both the Logging API and GCS, you will first need to start with a fork of fluentd, described in Writing Developer logs with Google Cloud Logging, and my fork of https://github.com/salrashid123/fluent-plugin-google-cloud

Other fluentd plugins from our sponsors…
