Knative Traffic Splitting

2020-01-08

Sample getting-started tutorial on how to do traffic splitting with Knative Serving using Istio.

This is just my “hello world” application demonstrating stuff here:

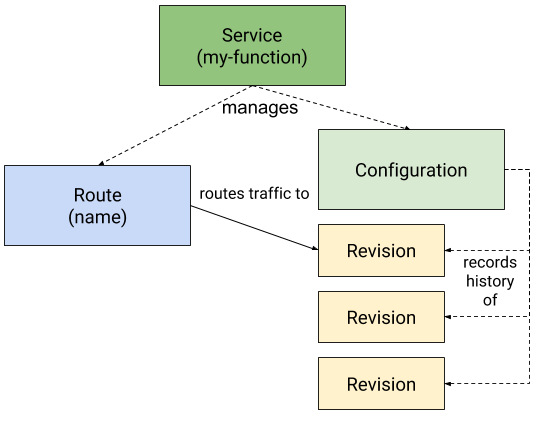

While you can easily follow those tutorials, this article is specifically designed to deconstruct the basic Knative Serving objects (Services, Configurations, Routes, Revisions) and show how to use them to split traffic.

Note: GCP Cloud Run does not yet support tags.

We will be showing traffic splitting using:

(A) Services

- Deploy Service (v1)
  - Creates Revision istioinit-gke-0001
  - 100% traffic goes to istioinit-gke-0001
- Create Revision istioinit-gke-0002
- Update Service and split traffic between Revisions

(B) Configuration and Routes

- Deploy Configuration for (v1) and a Route to that Revision
  - Creates Revision istioinit-gke-0001
  - 100% traffic goes to istioinit-gke-0001
- Create Configuration for (v2) and Revision istioinit-gke-0002
- Update Route and split traffic between Revisions

The application I’ll be using is the handy one I used for various other tutorials; it just returns the ‘version’ number (a simple 1 or 2 denoting the app version that was hit). We will use the version number as the signal to confirm that the routing scheme we specified actually got applied.

You can find more info about the app at istio_helloworld. The endpoint we’ll use is just /version.

Setup

- Install Istio, Knative on GKE.

We will not be using the GKE+Istio add-on or Cloud Run; Istio and Knative are installed by hand, as if on plain k8s (Knative with any k8s).

Install GKE and Istio

gcloud container clusters create cluster-1 --machine-type "n1-standard-2" --cluster-version=1.16.0 --zone us-central1-a --num-nodes 4 --enable-ip-alias

gcloud container clusters get-credentials cluster-1 --zone us-central1-a

kubectl create clusterrolebinding cluster-admin-binding --clusterrole=cluster-admin --user=$(gcloud config get-value core/account)

kubectl create ns istio-system

export ISTIO_VERSION=1.3.2

wget https://github.com/istio/istio/releases/download/$ISTIO_VERSION/istio-$ISTIO_VERSION-linux.tar.gz

tar xvzf istio-$ISTIO_VERSION-linux.tar.gz

wget https://storage.googleapis.com/kubernetes-helm/helm-v2.11.0-linux-amd64.tar.gz

tar xf helm-v2.11.0-linux-amd64.tar.gz

export PATH=`pwd`/istio-$ISTIO_VERSION/bin:`pwd`/linux-amd64/:$PATH

helm template istio-$ISTIO_VERSION/install/kubernetes/helm/istio-init --name istio-init --namespace istio-system | kubectl apply -f -

helm template istio-$ISTIO_VERSION/install/kubernetes/helm/istio --name istio --namespace istio-system \
  --set prometheus.enabled=true \
  --set sidecarInjectorWebhook.enabled=true > istio.yaml

kubectl apply -f istio.yaml

kubectl label namespace default istio-injection=enabled

At this point, you’ll have a GKE cluster with Istio running. You can verify everything is ok by running:

$ kubectl get no,po,rc,svc,ing,deployment -n istio-system
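That listing can get long, so a small filter can make unhealthy pods stand out. The sketch below is my own addition rather than part of the original setup: `not_ready_pods` is a hypothetical helper that reads `kubectl get po --no-headers` output on stdin and prints the name and status of any pod whose STATUS column is neither Running nor Completed.

```shell
#!/usr/bin/env bash
# not_ready_pods (hypothetical helper): reads `kubectl get po --no-headers`
# output on stdin and prints "NAME STATUS" for every pod whose STATUS
# column (third field) is neither Running nor Completed.
not_ready_pods() {
  awk 'NF >= 3 && $3 != "Running" && $3 != "Completed" { print $1, $3 }'
}

# Usage against the cluster:
#   kubectl get po -n istio-system --no-headers | not_ready_pods
```

An empty result means every pod in the namespace is either running or has finished its job.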

Acquire the GATEWAY_IP allocated to istio-ingress

$ export GATEWAY_IP=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

$ echo $GATEWAY_IP
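The load balancer IP can take a little while to be allocated, and until then the jsonpath query prints nothing. A minimal sketch of a retry wrapper follows; `wait_for_output`, `MAX_ATTEMPTS` and `SLEEP_SECONDS` are names I made up for illustration and are not part of kubectl or the original tutorial.

```shell
#!/usr/bin/env bash
# wait_for_output (hypothetical helper): re-run the given command until it
# prints a non-empty value, then echo that value. Gives up with exit
# status 1 after MAX_ATTEMPTS tries, sleeping SLEEP_SECONDS between tries.
wait_for_output() {
  local attempt=0
  local out=""
  while [ "$attempt" -lt "${MAX_ATTEMPTS:-30}" ]; do
    # Ignore failures of the wrapped command; only its output matters here.
    out=$("$@" 2>/dev/null) || true
    if [ -n "$out" ]; then
      printf '%s\n' "$out"
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "${SLEEP_SECONDS:-5}"
  done
  return 1
}

# Usage:
#   export GATEWAY_IP=$(wait_for_output kubectl -n istio-system get service \
#     istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
```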

Install Knative

We’ll install Knative on top of Istio now:

Follow the instructions detailed here Install on a Kubernetes cluster

A) Traffic Splitting with Services

First, let’s deploy a Service by itself.

$ kubectl apply -f service_1.yaml

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: istioinit-gke
  namespace: default
spec:
  template:
    metadata:
      name: istioinit-gke-0001
    spec:
      containers:
      - image: gcr.io/mineral-minutia-820/istioinit:1

This will automatically create a Configuration, Revision and a Route all for you:

$ kubectl get ksvc,configuration,routes,revisions

NAME                                        URL                                        LATESTCREATED        LATESTREADY          READY   REASON
service.serving.knative.dev/istioinit-gke   http://istioinit-gke.default.example.com   istioinit-gke-0001   istioinit-gke-0001   True

NAME                                              LATESTCREATED        LATESTREADY          READY   REASON
configuration.serving.knative.dev/istioinit-gke   istioinit-gke-0001   istioinit-gke-0001   True

NAME                                      URL                                        READY   REASON
route.serving.knative.dev/istioinit-gke   http://istioinit-gke.default.example.com   True

NAME                                              CONFIG NAME     K8S SERVICE NAME     GENERATION   READY   REASON
revision.serving.knative.dev/istioinit-gke-0001   istioinit-gke   istioinit-gke-0001   1            True

We can now send it some traffic

$ for i in {1..1000}; do curl -s -H "Host: istioinit-gke.default.example.com" http://${GATEWAY_IP}/version; sleep 1; done

11111111111

The simple 1 there is the version of the application we’ve deployed (version 1, since we deployed just gcr.io/mineral-minutia-820/istioinit:1).
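The Host header in the curl loop is simply the hostname from the URL column of the kubectl output. A small sketch of how it can be derived, assuming the name.namespace.domain layout seen in this cluster (other installs may configure a different domain or template); `ksvc_host` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# ksvc_host (hypothetical helper): build the Host header value for a Knative
# Service, assuming the name.namespace.domain layout seen in the URL column.
# Args: service name, namespace (default: default), domain (default: example.com)
ksvc_host() {
  printf '%s.%s.%s\n' "$1" "${2:-default}" "${3:-example.com}"
}

# Usage:
#   curl -s -H "Host: $(ksvc_host istioinit-gke)" "http://${GATEWAY_IP}/version"
```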

A Service by itself needs to target traffic towards a revision…but so far we only have one revision. Let’s deploy a revision for version 2 of our application, that is, a revision that uses gcr.io/mineral-minutia-820/istioinit:2:

apiVersion: serving.knative.dev/v1
kind: Configuration
metadata:
  name: istioinit-gke
  namespace: default
spec:
  template:
    metadata:
      name: istioinit-gke-0002
    spec:
      containers:
      - image: gcr.io/mineral-minutia-820/istioinit:2
        imagePullPolicy: IfNotPresent

$ kubectl apply -f config_2.yaml

$ kubectl get ksvc,configuration,routes,revisions

NAME                                        URL                                        LATESTCREATED        LATESTREADY          READY   REASON
service.serving.knative.dev/istioinit-gke   http://istioinit-gke.default.example.com   istioinit-gke-0001   istioinit-gke-0001   True

NAME                                              LATESTCREATED        LATESTREADY          READY   REASON
configuration.serving.knative.dev/istioinit-gke   istioinit-gke-0001   istioinit-gke-0001   True

NAME                                      URL                                        READY   REASON
route.serving.knative.dev/istioinit-gke   http://istioinit-gke.default.example.com   True

NAME                                              CONFIG NAME     K8S SERVICE NAME     GENERATION   READY   REASON
revision.serving.knative.dev/istioinit-gke-0001   istioinit-gke   istioinit-gke-0001   1            True
revision.serving.knative.dev/istioinit-gke-0002   istioinit-gke   istioinit-gke-0002   2            True

We’ve got two revisions, but the traffic for the Service is still going to version 1.

To apply a 50/50 split on the Service itself, we need to add a traffic section describing the targets:

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: istioinit-gke
  namespace: default
spec:
  template:
    metadata:
      name: istioinit-gke-0002
    spec:
      containers:
      - image: gcr.io/mineral-minutia-820/istioinit:2
  traffic:
  - revisionName: istioinit-gke-0001
    percent: 50
  - revisionName: istioinit-gke-0002
    percent: 50
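Knative expects the percent values in a traffic block to add up to 100, so it can be worth sanity-checking a split before editing the manifest. The sketch below is a plain arithmetic check; `check_split` is a hypothetical helper of mine, not a Knative or kubectl command.

```shell
#!/usr/bin/env bash
# check_split (hypothetical helper): succeed only if the given percentages
# are plain whole numbers that add up to 100.
check_split() {
  local total=0
  local p
  for p in "$@"; do
    case "$p" in
      # Reject empty values, non-digits, and leading zeros (e.g. "08").
      ''|*[!0-9]*|0?*)
        echo "not a plain whole number: '$p'" >&2
        return 1
        ;;
    esac
    total=$((total + p))
  done
  if [ "$total" -ne 100 ]; then
    echo "percentages add up to $total, expected 100" >&2
    return 1
  fi
}

# Usage:
#   check_split 50 50 && echo "split looks valid"
```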

Apply the config:

$ kubectl apply -f service_12.yaml

At this point, the frontend service traffic should be split evenly-ish:

$ for i in {1..1000}; do curl -s -H "Host: istioinit-gke.default.example.com" http://${GATEWAY_IP}/version; sleep 1; done

1222122222212212221121112122111
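Eyeballing a string of 1s and 2s gets tedious, so here is a minimal sketch that counts them. `tally_versions` is a hypothetical helper name; it assumes the responses are concatenated into a single string exactly as the curl loop prints them.

```shell
#!/usr/bin/env bash
# tally_versions (hypothetical helper): count how many responses came from
# each app version. Takes the concatenated /version output (e.g. "1221")
# as its first argument.
tally_versions() {
  local responses="$1"
  local ones
  local twos
  ones=$(printf '%s' "$responses" | tr -cd '1' | wc -c)
  twos=$(printf '%s' "$responses" | tr -cd '2' | wc -c)
  # $(( )) strips the padding some wc implementations add to their output.
  echo "v1=$((ones)) v2=$((twos))"
}

# Usage: paste the output of the curl loop as the argument, e.g.
#   tally_versions 1221
```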

Wonderful! We’ve used Services to split traffic. Let’s roll back the config so we can use the Configuration and Revision directly:

kubectl delete -f config_2.yaml -f service_12.yaml

Verify:

$ kubectl get ksvc,configuration,routes,revisions

No resources found.

# you may have to wait a couple of minutes for the pods to terminate; this is something to do with the app I have

$ kubectl get po

No resources found.

B) Traffic Splitting with Configurations and Revisions

Now deploy a Configuration, and a Route to that Configuration, for version 1:

cd config_revision/

$ kubectl apply -f config_1.yaml -f route_1.yaml

apiVersion: serving.knative.dev/v1
kind: Configuration
metadata:
  name: istioinit-gke
  namespace: default
spec:
  template:
    metadata:
      name: istioinit-gke-0001
    spec:
      containers:
      - image: gcr.io/mineral-minutia-820/istioinit:1
        imagePullPolicy: IfNotPresent
---
apiVersion: serving.knative.dev/v1
kind: Route
metadata:
  name: istioinit-gke
  namespace: default
spec:
  traffic:
  - revisionName: istioinit-gke-0001
    percent: 100

We didn’t deploy a Service, but a Route endpoint does get set up for you:

$ kubectl get ksvc,configuration,routes,revisions

NAME                                              LATESTCREATED        LATESTREADY          READY   REASON
configuration.serving.knative.dev/istioinit-gke   istioinit-gke-0001   istioinit-gke-0001   True

NAME                                      URL                                        READY   REASON
route.serving.knative.dev/istioinit-gke   http://istioinit-gke.default.example.com   True

NAME                                              CONFIG NAME     K8S SERVICE NAME     GENERATION   READY   REASON
revision.serving.knative.dev/istioinit-gke-0001   istioinit-gke   istioinit-gke-0001   1            True

Which means we can send traffic to it just as before:

$ for i in {1..1000}; do curl -s -H "Host: istioinit-gke.default.example.com" http://${GATEWAY_IP}/version; sleep 1; done

11111111

Now deploy a configuration for version 2:

apiVersion: serving.knative.dev/v1
kind: Configuration
metadata:
  name: istioinit-gke
  namespace: default
spec:
  template:
    metadata:
      name: istioinit-gke-0002
    spec:
      containers:
      - image: gcr.io/mineral-minutia-820/istioinit:2
        imagePullPolicy: IfNotPresent

As before, we’ve got a new revision but traffic still goes to version 1:

$ kubectl get ksvc,configuration,routes,revisions

NAME                                              LATESTCREATED        LATESTREADY          READY   REASON
configuration.serving.knative.dev/istioinit-gke   istioinit-gke-0002   istioinit-gke-0002   True

NAME                                      URL                                        READY   REASON
route.serving.knative.dev/istioinit-gke   http://istioinit-gke.default.example.com   True

NAME                                              CONFIG NAME     K8S SERVICE NAME     GENERATION   READY   REASON
revision.serving.knative.dev/istioinit-gke-0001   istioinit-gke   istioinit-gke-0001   1            True
revision.serving.knative.dev/istioinit-gke-0002   istioinit-gke   istioinit-gke-0002   2            True

To split traffic, we’ll now update the Route to send a 50/50 split:

apiVersion: serving.knative.dev/v1
kind: Route
metadata:
  name: istioinit-gke
  namespace: default
spec:
  traffic:
  - revisionName: istioinit-gke-0001
    percent: 50
  - revisionName: istioinit-gke-0002
    percent: 50
---
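To try ratios other than 50/50 without editing the file each time, the same Route can be generated from the shell. This is only a sketch: `route_manifest` is a hypothetical helper, and it hard-codes the Route and revision names used in this article.

```shell
#!/usr/bin/env bash
# route_manifest (hypothetical helper): print a Route like the one above,
# with the two revision weights taken from the arguments.
# Args: percent for istioinit-gke-0001, percent for istioinit-gke-0002
route_manifest() {
  cat <<EOF
apiVersion: serving.knative.dev/v1
kind: Route
metadata:
  name: istioinit-gke
  namespace: default
spec:
  traffic:
  - revisionName: istioinit-gke-0001
    percent: $1
  - revisionName: istioinit-gke-0002
    percent: $2
EOF
}

# Usage:
#   route_manifest 90 10 | kubectl apply -f -
```

The helper does no validation of its own, so the two numbers still need to add up to 100.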

Then verify:

$ for i in {1..1000}; do curl -s -H "Host: istioinit-gke.default.example.com" http://${GATEWAY_IP}/version; sleep 1; done

2111111221222212222112
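With only a couple of dozen requests the counts will rarely land on exactly 50/50, so a check with some slack is more useful than an exact comparison. The sketch below is an assumption-laden illustration: `split_within` is a hypothetical helper, it treats every character of the input as one response, and it uses integer percentages.

```shell
#!/usr/bin/env bash
# split_within (hypothetical helper): succeed if version 1's share of the
# responses is within TOLERANCE percentage points of EXPECTED.
# Args: responses string (e.g. "1221"), expected v1 percent, tolerance
split_within() {
  local responses="$1"
  local expected="$2"
  local tolerance="$3"
  local total=${#responses}
  local rest
  # Everything that is not a '1'; the length difference is the v1 count.
  rest=$(printf '%s' "$responses" | tr -d '1')
  local ones=$(( total - ${#rest} ))
  [ "$total" -gt 0 ] || return 1
  local share=$(( ones * 100 / total ))   # integer percent, rounded down
  local diff=$(( share - expected ))
  if [ "$diff" -lt 0 ]; then
    diff=$(( 0 - diff ))
  fi
  [ "$diff" -le "$tolerance" ]
}

# Usage: paste the curl loop output as the first argument, e.g.
#   split_within 1221 50 20 && echo "split looks right"
```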

That’s it: this is a small snippet that shows traffic splitting with Knative (well…at least as of 1/9/20).

You can find the yaml on this git repo here

Good luck.

config_1.yaml

apiVersion: serving.knative.dev/v1
kind: Configuration
metadata:
  name: istioinit-gke
  namespace: default
spec:
  template:
    metadata:
      name: istioinit-gke-0001
    spec:
      containers:
      - image: gcr.io/mineral-minutia-820/istioinit:1
        imagePullPolicy: IfNotPresent

config_2.yaml

apiVersion: serving.knative.dev/v1
kind: Configuration
metadata:
  name: istioinit-gke
  namespace: default
spec:
  template:
    metadata:
      name: istioinit-gke-0002
    spec:
      containers:
      - image: gcr.io/mineral-minutia-820/istioinit:2
        imagePullPolicy: IfNotPresent

config2.yaml

apiVersion: serving.knative.dev/v1
kind: Configuration
metadata:
  name: istioinit-gke
  namespace: default
spec:
  template:
    metadata:
      name: istioinit-gke-0002
    spec:
      containers:
      - image: gcr.io/mineral-minutia-820/istioinit:2
        imagePullPolicy: IfNotPresent

service1.yaml

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: istioinit-gke
  namespace: default
spec:
  template:
    metadata:
      name: istioinit-gke-0001
    spec:
      containers:
      - image: gcr.io/mineral-minutia-820/istioinit:1

service12.yaml

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: istioinit-gke
  namespace: default
spec:
  template:
    metadata:
      name: istioinit-gke-0001
    spec:
      containers:
      - image: gcr.io/mineral-minutia-820/istioinit:1
  traffic:
  - revisionName: istioinit-gke-0001
    percent: 50
  - revisionName: istioinit-gke-0002
    percent: 50
